Breaking the Barriers to Productive Work | Part 3

In our previous blog, we introduced the problem of listening to 50 people and producing a list ranked in order of preference, a process that would take at minimum a long day at an offsite. With the CrowdSmart system, this can be done in minutes, and because the process is asynchronous, each participant can contribute at their leisure. The process supports and encourages co-creation and serendipitous discovery, surfacing answers to questions you did not think to ask.

CrowdSmart handles a diversity of ideas by intelligently showing unique sample lists to participants and monitoring how individuals react. CrowdSmart’s learning algorithm builds hypotheses based on real-time results, similar to the work of a great facilitator. Each sample list blends hypothesis-testing with floating new ideas to see if a new discovery emerges as important to the group, and the ideas in a list are chosen to be as different from each other as possible. The system learns a “citation ranking” while at the same time learning who the knowledge influencers are in the particular work group session.
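To make the idea of blending hypothesis-testing with floating new ideas concrete, here is a minimal sketch. It is not CrowdSmart's algorithm: it assumes a simple Beta-Bernoulli model of each idea's relevance and uses Thompson sampling to pick the sample list, and the names `Idea`, `sample_list`, `record_reaction`, and `ranked` are hypothetical. The diversity step (picking ideas that differ from each other) is left out for brevity.

```python
import random


class Idea:
    """One participant-submitted idea with a Beta(alpha, beta) relevance belief."""

    def __init__(self, text):
        self.text = text
        self.alpha = 1.0  # prior pseudo-count of positive reactions (assumption)
        self.beta = 1.0   # prior pseudo-count of negative reactions (assumption)

    def mean(self):
        # Posterior mean of the relevance probability.
        return self.alpha / (self.alpha + self.beta)


def sample_list(ideas, k, rng):
    """Pick k ideas to show one participant using Thompson sampling.

    Well-supported ideas are usually drawn high (hypothesis-testing), while
    ideas with little evidence have wide beliefs and sometimes win a slot
    (floating new ideas).
    """
    draws = [(rng.betavariate(idea.alpha, idea.beta), idea) for idea in ideas]
    draws.sort(key=lambda pair: pair[0], reverse=True)
    return [idea for _, idea in draws[:k]]


def record_reaction(idea, endorsed):
    """Update the belief after a participant reacts to an idea."""
    if endorsed:
        idea.alpha += 1
    else:
        idea.beta += 1


def ranked(ideas):
    """Current ranking by posterior mean relevance, highest first."""
    return sorted(ideas, key=lambda idea: idea.mean(), reverse=True)
```

In a session, each participant would receive `sample_list(ideas, k, rng)`, their reactions would be passed to `record_reaction`, and `ranked(ideas)` would give the running priority list.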

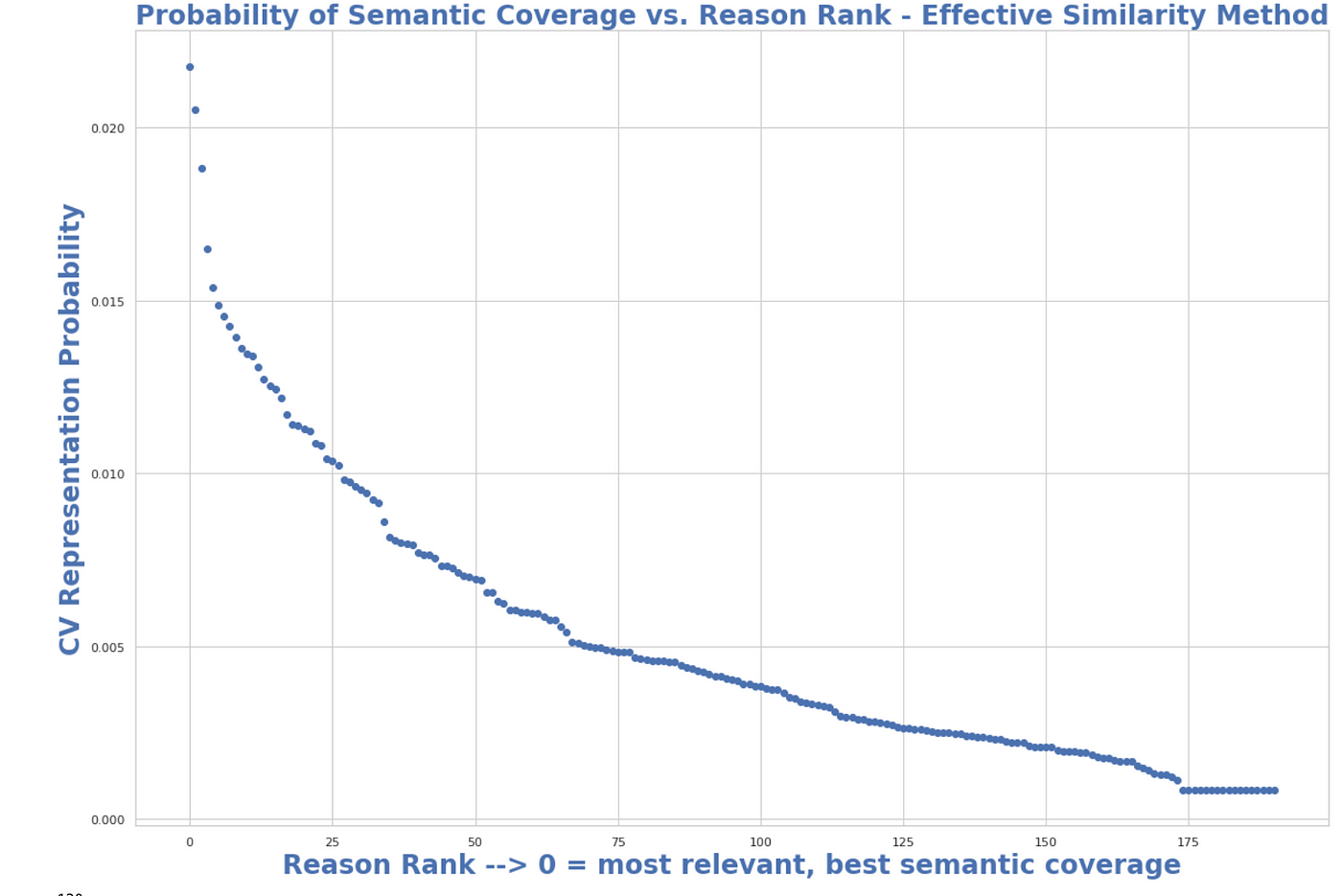

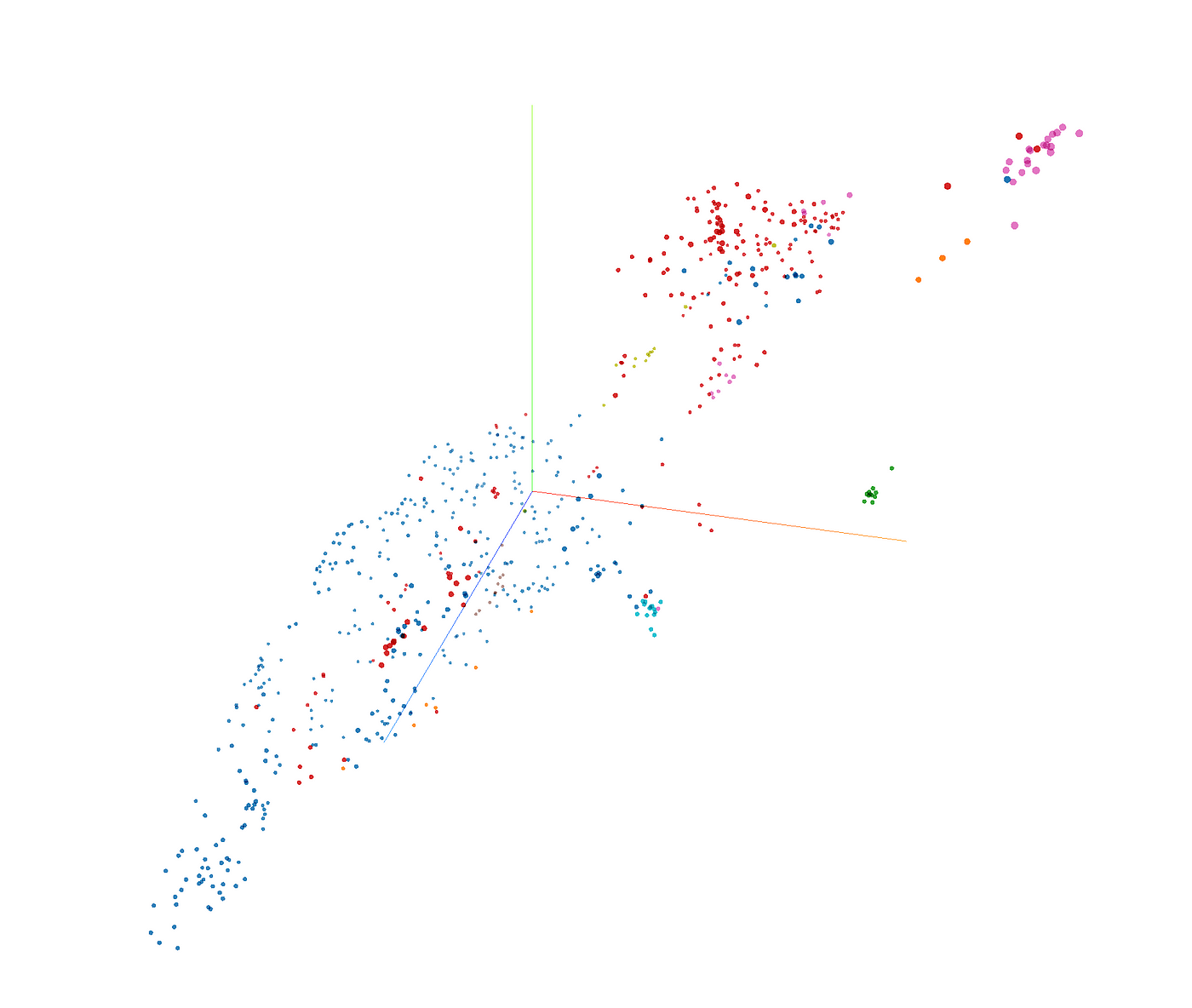

For the non-technical person, this is an even more reliable way of learning priorities than a human facilitator because it can listen more broadly and deeply, and with greater accuracy, to groups of any size. For the technical person, you might recognize this as a Bayesian learning process. The referenced papers (2) show that the process mathematically converges to a ranked list with statistical accuracy. The plot in the figure demonstrates the work the system is doing by dynamically learning what is important and meaningful in a conversation:

Each data point is a sentence in the voice of its author. Each sentence is characterized by two metrics:

- The probability of relevance to the group that results from the sampling and prioritization process described above, and

- The probability of semantic coverage of the sentence: specifically, the probability that this statement best covers the topic in question.

Collective voice analysis combines these two metrics to create a precise ranking that balances group-assessed relevance with semantic coverage of the topic. The data points to the left emerged as relevant and descriptive of the overall conversation. This type of distribution emerges from any collaborative discussion.
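As an illustration of how two such metrics could be combined into a single ranking, here is a minimal sketch. The combination rule is an assumption: this post does not state the formula CrowdSmart uses, so the sketch takes the geometric mean of the two probabilities, which rewards sentences that do well on both. The function names and the sample sentences are hypothetical.

```python
from math import sqrt


def combined_score(relevance, coverage):
    """Geometric mean of the two probabilities (an assumed combination rule)."""
    return sqrt(relevance * coverage)


def rank_sentences(sentences):
    """Sort (text, relevance, coverage) tuples by combined score, best first."""
    return sorted(
        sentences,
        key=lambda s: combined_score(s[1], s[2]),
        reverse=True,
    )


# Hypothetical sentences with made-up probabilities, for illustration only.
example = [
    ("Highly relevant but narrow", 0.9, 0.2),
    ("Balanced on both metrics", 0.6, 0.6),
    ("Broad but less relevant", 0.3, 0.9),
]

for text, relevance, coverage in rank_sentences(example):
    print(f"{combined_score(relevance, coverage):.2f}  {text}")
```

With a geometric mean, a sentence that is strong on only one metric ranks below one that is moderately strong on both. A weighted combination would be an equally plausible choice.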

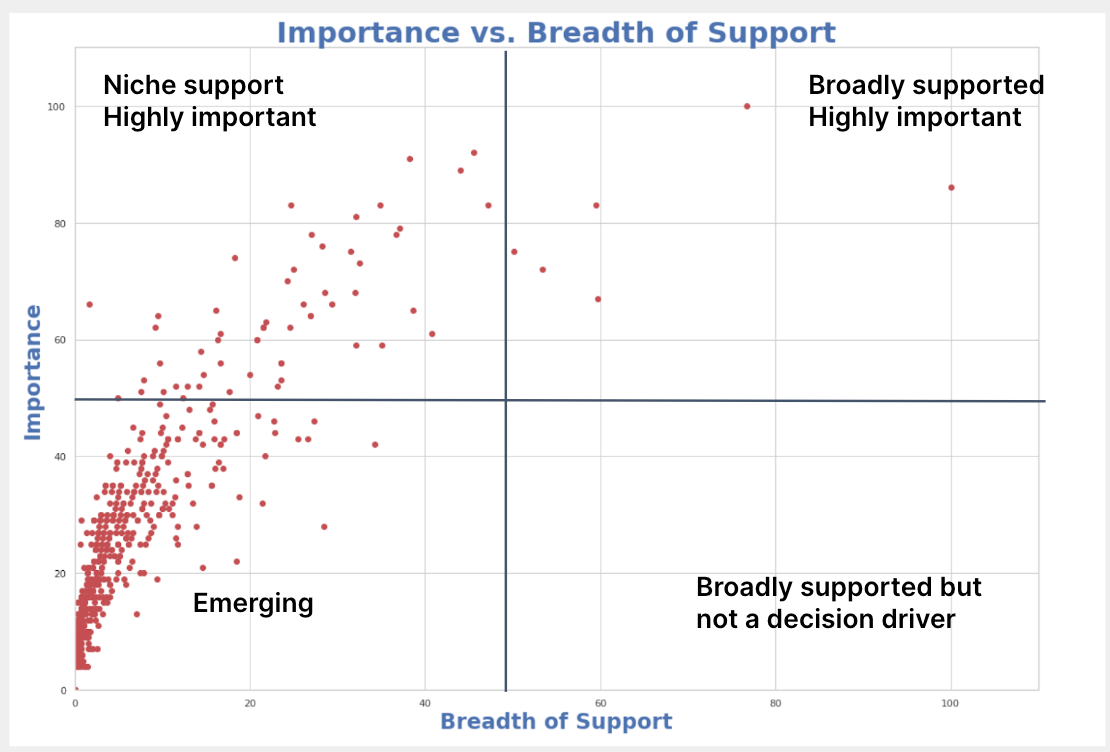

The following chart shows a distribution of reasons in terms of relative importance to the group vs. the breadth of support:

Without the relevance learning process, all reasons would be equally likely: the first graph would be a horizontal line, and in the second distribution all the data points would cluster at the origin.

Most qualitative research on conversational data treats all things as equally likely. Relevance learning applies preference learning (the law of comparative judgment) to collective reasoning. A few things emerge from the process as important and meaningful. The upper right-hand corner of the chart above is sometimes called the “no-brainer” or “action” quadrant. CrowdSmart learns priorities from virtual workgroups using a very simple, easy-to-use, customizable process.
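For readers curious about the law of comparative judgment mentioned above, here is a minimal sketch of a simplified Thurstone Case V style estimate. It is a textbook-style illustration, not CrowdSmart's implementation: the `wins` matrix, the clipping bounds, and the function name are assumptions. The idea is that the proportion of times one item is preferred over another is converted to a z-score, and an item's scale value is the average of its z-scores against the other items.

```python
from statistics import NormalDist


def thurstone_case_v(wins):
    """Estimate scale values from pairwise preference counts.

    wins[i][j] is the number of times item i was preferred over item j.
    Returns one scale value per item; higher means more preferred.
    """
    n = len(wins)
    inv_cdf = NormalDist().inv_cdf  # standard normal quantile function
    scale = []
    for i in range(n):
        z_scores = []
        for j in range(n):
            if i == j:
                continue
            total = wins[i][j] + wins[j][i]
            if total == 0:
                continue  # this pair was never compared
            p = wins[i][j] / total
            # Clip so unanimous preferences do not give infinite z-scores
            # (the 0.01 and 0.99 bounds are an arbitrary assumption).
            p = min(max(p, 0.01), 0.99)
            z_scores.append(inv_cdf(p))
        scale.append(sum(z_scores) / len(z_scores) if z_scores else 0.0)
    return scale


# Hypothetical counts: item 0 usually beats items 1 and 2, and 1 beats 2.
wins = [
    [0, 8, 9],
    [2, 0, 7],
    [1, 3, 0],
]
values = thurstone_case_v(wins)
```

Sorting the items by `values` gives a ranked list on an interval scale, so the gaps between items carry meaning as well as the order.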

A collective reasoning virtual workshop on the future of work will produce the average number of days in the office desired by the group and a ranked list of reasons in order of relative importance to the group. CrowdSmart uses collective voice analysis to automatically produce a representative voice that distills the essence of the conversation ranked in order of a combination of relevance and semantic coverage.

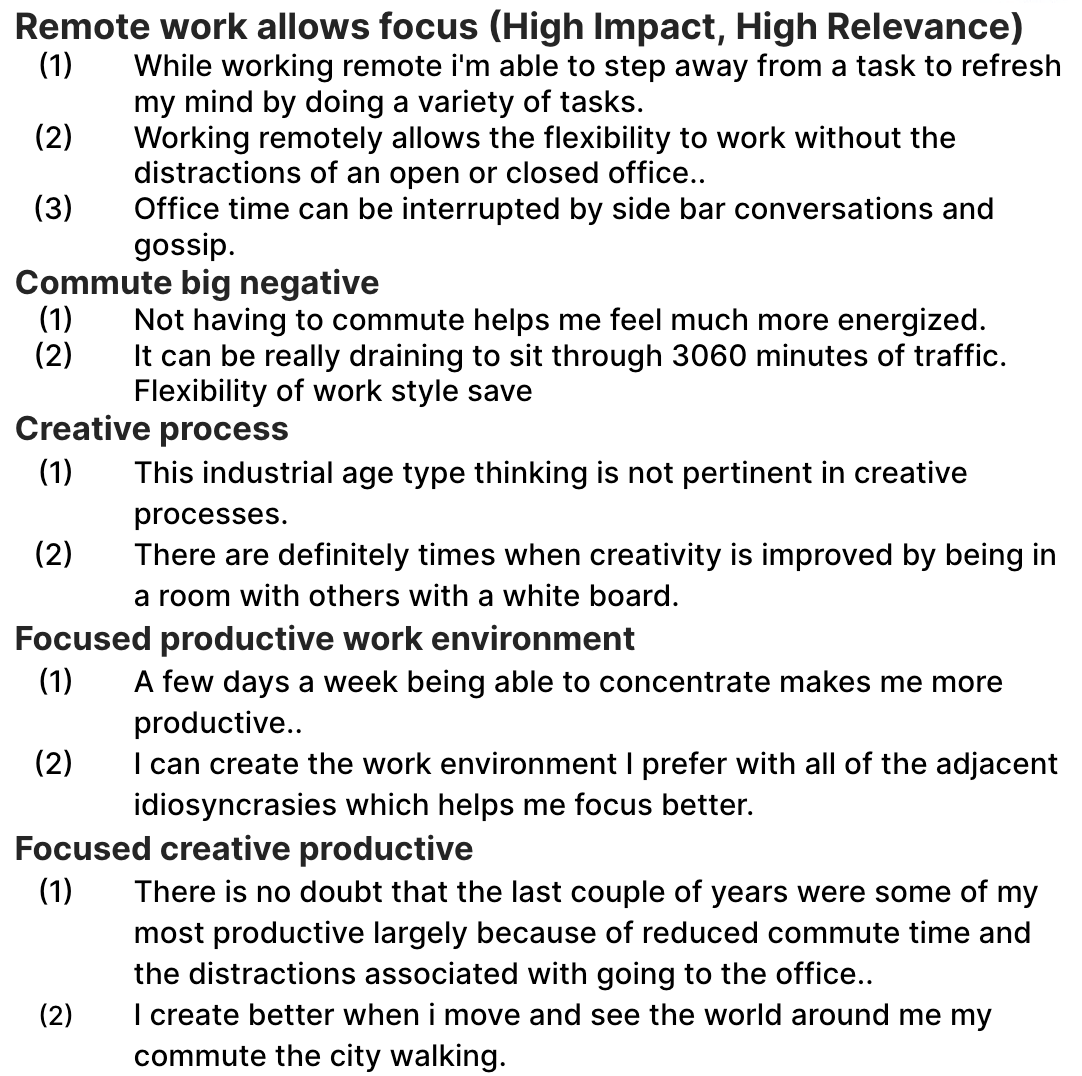

For more detail, the reasons for the score are grouped into clusters that represent the landscape of collective thought driving the score. We use collective voice analysis to extract a label and an abstract narrative for each cluster. For example, the 36 participants produced 60 reasons in response to the question about work productivity and creativity, and those reasons were grouped into 5 collections as follows:

The data shown are from a small group and frankly not particularly exciting. The point of the exercise is to observe the ease with which it is possible to ask a simple question of a group of any size and learn what emerges from their interaction on the topic.

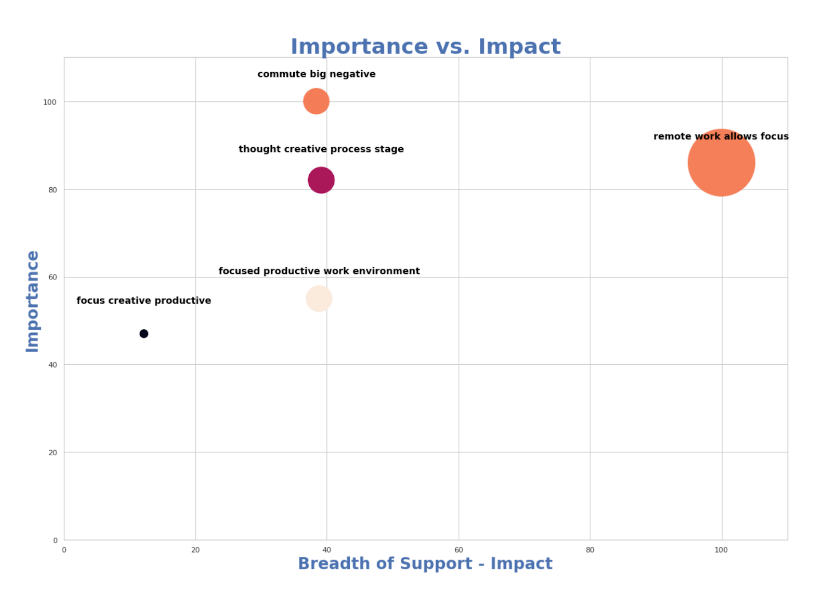

Combining automated theme learning with the importance and impact chart shown above generates group shared themes, ranked by relevance and breadth of support in the group:

We could just as easily use this question to understand the collective mindset of a 100,000-employee company, allowing everyone to co-create. There is no other way to reach out to a large group and engage in a discovery process with conclusive results that are statistically accurate.

Much of the capability is made possible with recent developments in Natural Language Processing (transformer models). The system turns the discussion into a geometric map of the collective mind as shown in the figure below. An image of a larger group’s collective mind shows a glimpse of the potential of turning collaborative discussions into explorable topologies of a collective thought process.

CrowdSmart provides a mechanism for dialogue at large. The only response required is an answer that responds to the group’s collective voice. Large group dialogue is now possible. Rather than a vague and unsatisfying “we hear you,” a specific response is possible. Communication in response to generous listening increases engagement. Specific responses create a channel for ongoing dialogue. Listening and engaging with the hearts and minds of the organization creates a new culture of communication. Never was that more important than now.

From discovery to prediction

Discovery of importance and relevance provides a foundation for making the right organizational decisions, but it doesn’t end there. Innovating through turbulent times requires investing in products, new ventures, buying companies, and organizational development initiatives. Diversity of perspective on any decision increases the probability of getting it right.

The original journey for building the technology discussed here focused on the hard problem of early-stage investing. When we founded CrowdSmart, we wanted to know whether, by assembling a unique and cognitively diverse group of angel investors and experts, we could predict which startups would gain traction with investors for follow-on investment. We knew collective intelligence demonstrated superior prediction accuracy. For investment purposes, we also wanted to know the reasoning behind the prediction. That led to the development of collective reasoning.

The results from a four-year initiative were significant. The process was >80% accurate and radically reduced bias (42% of the companies funded were female-led). The details are discussed in Applying collective reasoning to Investing.

By designing the collaborative work group agenda to include a decision rubric (e.g., how do you score the business, the team, strategic fit, etc.), the process discussed here applies to capital deployment decisions. The system produces a probabilistic graphical model (a Bayesian Belief Network) of the prediction and an explanation of the reasoning that led to the decision.

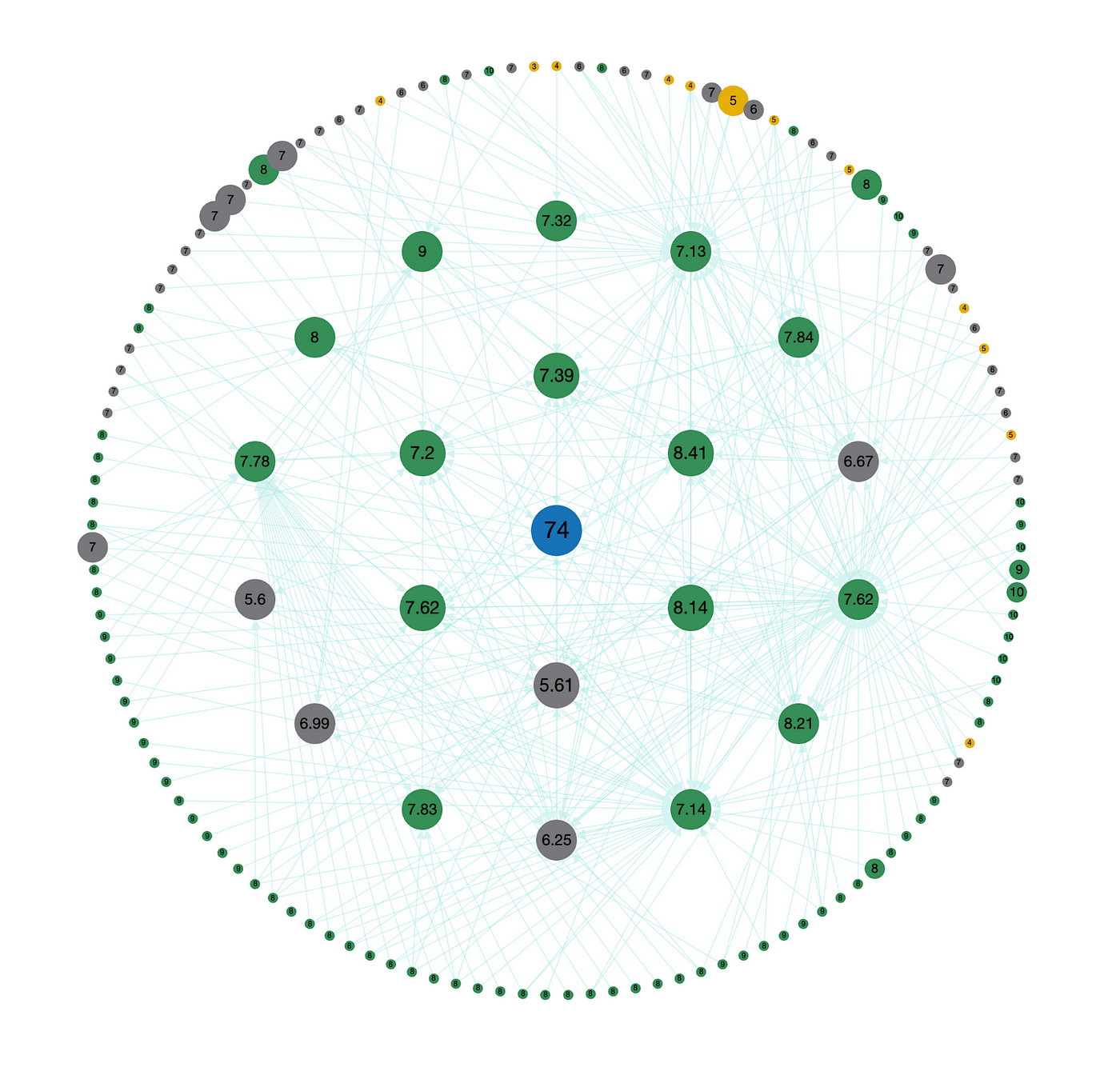

The figure below shows an example of an acquisition decision:

The outer circle is constructed of all the reasons for scores with the size of the dot driven by peer-reviewed relevance. Peer-supported evidence increases the influence value of the quantitative score. Reasons are clustered into themes that influence the specific decision elements scored. In this case, the questions included: assessment of the business, assessment of the team, assessment of strategic fit, assessment of ease of integration, and assessment of technology. At the center is the score representing the group’s prediction that this will be a successful acquisition.
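The idea that peer-supported evidence increases the influence of a quantitative score can be sketched very simply. This is not the Bayesian Belief Network the system produces: it is a plain relevance-weighted average, and the function names, the rubric element names, and the numbers are all hypothetical.

```python
def weighted_score(entries):
    """Relevance-weighted mean of (score, relevance) pairs for one rubric element.

    Scores whose reasons earned more peer support (higher relevance) pull the
    result further toward themselves. Returns None if there is no weight.
    """
    total_weight = sum(relevance for _, relevance in entries)
    if total_weight == 0:
        return None
    return sum(score * relevance for score, relevance in entries) / total_weight


def overall(rubric):
    """Score each rubric element, then average the elements (an assumed rule)."""
    per_element = {name: weighted_score(entries) for name, entries in rubric.items()}
    scored = [value for value in per_element.values() if value is not None]
    combined = sum(scored) / len(scored) if scored else None
    return per_element, combined


# Hypothetical data: (score out of 10, peer-reviewed relevance of the reason).
rubric = {
    "team": [(8, 0.9), (4, 0.1)],
    "business": [(6, 0.5), (6, 0.5)],
}
per_element, combined = overall(rubric)
```

Here the well-supported score of 8 dominates the "team" element, so it lands close to 8 rather than at the unweighted midpoint of 6.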

Rethinking the cost of collaboration

With an AI facilitator, the cost of collaboration plummets. CrowdSmart creates an inversion, a new calculus of collaboration. An AI that can facilitate collaboration at scale asynchronously, recording every detail of the interaction and saving it in a knowledge model of the process, changes the game. In the new calculus, the cost of not collaborating introduces immense risk. Not co-creating on new products with influencers, customers, and prospects creates a substantial increase in the risk of failure. Not collaborating on strategic initiatives such as M&A or strategic partnerships increases the risk of failure. Not collaborating on the future of work and organizational programs and policies increases the risk of leading without anyone following you. Collective reasoning provides a way to collaborate at scale, opening new approaches to find common ground and making it possible to look around the corner by forecasting the outcomes of decisions.

(1) Patent #11366972 registered with four more pending

(2) “Transforming Collaborative Decision Making with Collective Reasoning” and “Finding common ground: social collaboration based on collective intelligence and AI”